Solving the Context Rot Problem For Coding Agents

Published July 16, 2025

Recent research from Chroma has revealed a critical issue affecting AI coding agents: Context Rot. Their comprehensive study of 18 large language models, including GPT-4.1, Claude 4, Gemini 2.5, and Qwen3, demonstrates that even with million-token context windows, model performance degrades significantly as input length increases.

This isn't just a theoretical problem; it's happening right now in real-world coding scenarios. When AI agents attempt to implement libraries, frameworks, or APIs from traditional documentation, they fall victim to the same context degradation issues that Chroma's research identified.

The Context Rot Problem

Chroma's research uncovered several critical issues that plague long-context AI interactions:

Performance Degradation is Real

Even models that achieve near-perfect scores on simple tasks fail dramatically when context length increases. Claude Sonnet 4 drops from 99% to 50% accuracy on basic word replication tasks as input length grows. In practical terms, this means your AI coding assistant becomes less reliable as it processes more documentation.
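The replication result is easier to picture with a concrete task. The sketch below assumes a simplified version of it: the model receives one word repeated many times with a single different word inserted, and must return the sequence verbatim. buildReplicationInput and replicationScore are hypothetical names, and the position-by-position score is our own simplification rather than Chroma's harness or metric. Raising length is what makes the input longer.

```typescript
// Hypothetical sketch of a repeated-word replication test.
// NOT Chroma's evaluation harness; names and scoring are illustrative assumptions.

// Build `length` words: `commonWord` everywhere except one `uniqueWord`
// at `uniqueIndex`. Larger `length` values produce longer model inputs.
function buildReplicationInput(
  commonWord: string,
  uniqueWord: string,
  length: number,
  uniqueIndex: number
): string {
  const words: string[] = [];
  for (let i = 0; i < length; i++) {
    words.push(i === uniqueIndex ? uniqueWord : commonWord);
  }
  return words.join(" ");
}

// Simplified score: fraction of positions where the model's output matches
// the expected word exactly. Missing or extra words count against the score.
function replicationScore(expected: string, output: string): number {
  const want = expected.split(" ");
  const got = output.trim().split(/\s+/);
  const positions = Math.max(want.length, got.length);
  let correct = 0;
  for (let i = 0; i < Math.min(want.length, got.length); i++) {
    if (want[i] === got[i]) correct++;
  }
  return correct / positions;
}
```

For example, buildReplicationInput("apple", "apples", 5, 2) yields "apple apple apples apple apple", and an answer that drops the unique word scores 0.8.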

Three Key Failure Modes

- Conversational Memory: Models struggle to reason over long conversations, performing worse on a full history of 500 messages (~120k tokens) than on a condensed ~300-token version

- Ambiguity Amplification: As input length increases, models handle ambiguous requests progressively worse

- Distractor Confusion: Models struggle to distinguish relevant information from irrelevant but topically similar information

The Documentation Problem

Traditional documentation creates the perfect storm for context rot:

- Sprawling content with marketing fluff and tangential information

- Multiple conflicting sources across different API versions

- Unstructured information that forces models to parse irrelevant context

- Ambiguous instructions that become progressively harder to parse in long contexts

How Install.md Guides Address Context Rot

Install.md guides represent a paradigm shift in how we structure information for AI coding agents. By design, they solve the core issues identified in Chroma's research:

Structured Information Architecture

- Clear step-by-step instructions eliminate ambiguity

- Focused scope targeting specific implementation goals

- Hierarchical organization that minimizes cognitive load

Context Window Optimization

- Minimal token usage through concise, purpose-built instructions

- Relevant information only: no marketing content or examples

- Predictable structure allowing models to quickly locate needed information

Distractor Elimination

- Single source of truth vs. multiple conflicting documentation sources

- Version-specific instructions eliminating confusion from outdated APIs

- Tool-specific focus including only implementation-relevant information

Our Research: Putting It to the Test

To validate these benefits, we conducted our own experiment comparing install.md-guided agents versus self-guided ones.

Creating the Install.md Guide

Using our Admin MCP server together with the Admin Onboarding MCP server, we generated a Next.js install.md guide directly from the Next.js repository:

- Admin MCP: https://install.md/mcp/admin (requires an Authorization: Bearer $API_KEY header)

- Admin Onboarding guide: https://install.md/installmd/admin-mcp-onboarding (just an install.md project!)
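The Authorization requirement is easiest to see in the raw request. Assuming the Admin MCP server uses MCP's Streamable HTTP transport, where a client POSTs JSON-RPC messages to the server URL, the first message is an initialize request that carries the bearer token. The client name, version, and protocol version below are illustrative placeholders, and in practice an MCP client such as Claude Code builds this request for you once the header is configured.

```typescript
// Sketch of the first request an MCP client might send to the Admin MCP server.
// Assumes MCP's Streamable HTTP transport (JSON-RPC over HTTP POST);
// clientInfo and protocolVersion values are illustrative, not required values.

interface HttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildInitializeRequest(apiKey: string): HttpRequest {
  // JSON-RPC 2.0 "initialize" message that opens an MCP session.
  const initialize = {
    jsonrpc: "2.0",
    id: 1,
    method: "initialize",
    params: {
      protocolVersion: "2025-03-26",
      capabilities: {},
      clientInfo: { name: "example-client", version: "0.1.0" },
    },
  };

  return {
    url: "https://install.md/mcp/admin",
    method: "POST",
    headers: {
      // The header the Admin MCP server requires.
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
      // Streamable HTTP servers may reply with plain JSON or an SSE stream.
      Accept: "application/json, text/event-stream",
    },
    body: JSON.stringify(initialize),
  };
}
```

The returned object's method, headers, and body could be passed to fetch to try the handshake by hand.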

With these two servers installed, we can use the single prompt provided by the Admin Onboarding guide, which appears as a slash command in Claude Code:

/installmd-onboarding:use-admin-mcp-onboarding

Running this command from a local clone of the Next.js GitHub repository used the following resources:

Total cost: $0.51

Total duration (API): 3m 10.9s

Total duration (wall): 8m 43.4s

Total code changes: 0 lines added, 0 lines removed

Usage by model:

claude-sonnet: 27 input, 8.0k output, 716.0k cache read, 45.9k cache write

Success! The guide was created automatically, which you can find here: https://install.md/installmd/nextjs

(Psst.. 👋 Guille, let's transfer this to your ▲Vercel install.md team)

The Implementation Challenge

We then tested both approaches on a realistic coding task: building a Next.js full-stack application with text analysis capabilities, including form submission, analysis handlers, and visualization toggles.

Building a Simple Next.js App

We had Claude Code build an application for us. The goal is to compare token use and accuracy between an install.md-guided agent and one that relies only on its own ability to search and self-guide.

We'll simply run this prompt:

Create a Next.js full-stack application that enables a user to enter text, and then run some analysis of the text so they learn how many characters, lines, and words are present in the text.

They should submit the text in a form, and then a handler should run the analysis.

It should also have 3 different views on the analyzed text, which creates stylized boundaries around each of the characters, lines, and words, to visualize where these boundaries are in the input text.

The user should see their submitted text rendered once it is analyzed and have toggles to show these different boundary visualizations.
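For reference, the core logic this prompt asks for is small. The sketch below is our own illustration, not code produced by either agent: segmentText splits the submitted text into character, line, or word segments with start/end offsets (what the three boundary views would wrap in styled elements), and analyzeText derives the counts from those segments. The definitions are assumptions: characters are Unicode code points including whitespace and newlines, lines are separated by newlines, and a word is any run of non-whitespace characters.

```typescript
// Hypothetical helpers for the text-analysis app described in the prompt.
// Not the code either agent generated; counting rules are assumptions.

type BoundaryKind = "characters" | "lines" | "words";

interface Segment {
  text: string;
  start: number; // inclusive offset into the normalized text
  end: number; // exclusive offset into the normalized text
}

interface TextAnalysis {
  characters: number;
  lines: number;
  words: number;
}

// Form submissions typically encode textarea line breaks as CRLF,
// so normalize every line break to "\n" before segmenting.
function normalize(input: string): string {
  return input.replace(/\r\n?/g, "\n");
}

function segmentText(input: string, kind: BoundaryKind): Segment[] {
  const text = normalize(input);
  const segments: Segment[] = [];

  if (kind === "characters") {
    // Walk code points so a surrogate pair (e.g. most emoji) counts once.
    let i = 0;
    while (i < text.length) {
      const code = text.charCodeAt(i);
      const next = text.charCodeAt(i + 1); // NaN past the end, so isPair is false
      const isPair = code >= 0xd800 && code <= 0xdbff && next >= 0xdc00 && next <= 0xdfff;
      const width = isPair ? 2 : 1;
      segments.push({ text: text.slice(i, i + width), start: i, end: i + width });
      i += width;
    }
  } else if (kind === "lines") {
    // Every "\n" starts a new line; empty input has zero lines.
    if (text.length > 0) {
      let start = 0;
      for (const line of text.split("\n")) {
        segments.push({ text: line, start, end: start + line.length });
        start += line.length + 1; // skip the "\n" separator
      }
    }
  } else {
    // Words are maximal runs of non-whitespace characters.
    const wordPattern = /\S+/g;
    let match: RegExpExecArray | null;
    while ((match = wordPattern.exec(text)) !== null) {
      segments.push({ text: match[0], start: match.index, end: match.index + match[0].length });
    }
  }
  return segments;
}

// The analysis a form handler could run on the submitted text.
function analyzeText(input: string): TextAnalysis {
  return {
    characters: segmentText(input, "characters").length,
    lines: segmentText(input, "lines").length,
    words: segmentText(input, "words").length,
  };
}
```

In a Next.js app, analyzeText could be called from whatever receives the form submission, such as a Server Action or Route Handler, while a client component would use the offsets from segmentText to render each toggleable boundary view.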

In self-guided mode, we will only give the agent the URL to the Next.js docs.

In install.md-guided mode, we will install the newly created Next.js MCP guide server.

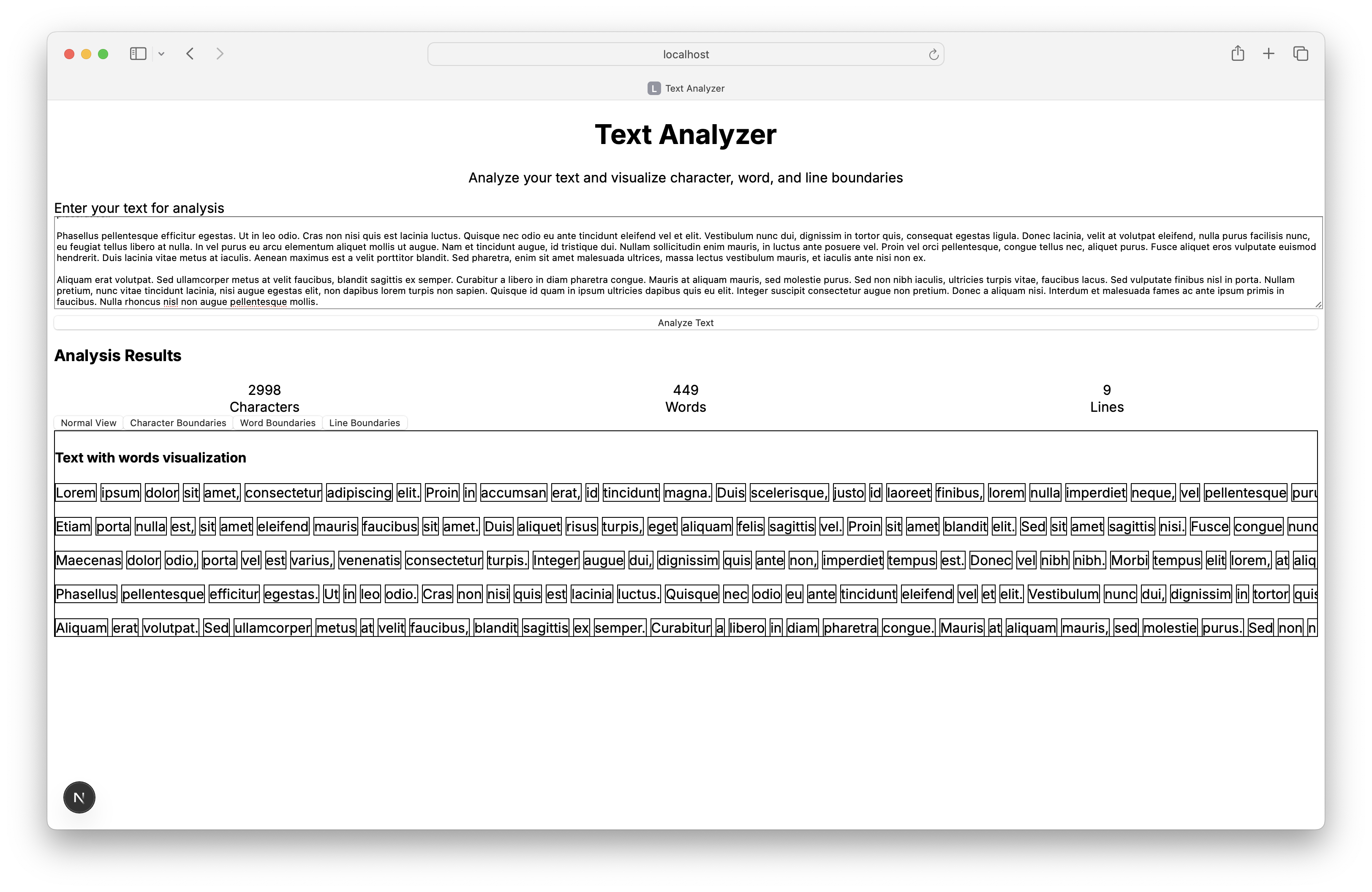

Self-guided Agent

- Failed to create the project initially

- Repeatedly realized it needed more dependencies and installed them

- Failed to start the dev server: "Error: Command timed out after 2m 0.0s ⚠ Invalid next.config.js options detected"

- Fixed the config option errors after looking up docs

- Completed the implementation

- Started the dev server

Claude Code Usage Stats:

Total cost: $0.4289

Total duration (API): 3m 34.9s

Total duration (wall): 10m 3.9s

Total code changes: 370 lines added, 7 lines removed

Usage by model:

claude-3-5-haiku: 13.5k input, 439 output, 0 cache read, 0 cache write

claude-sonnet: 59 input, 9.5k output, 690.0k cache read, 17.6k cache write

Application Screenshot

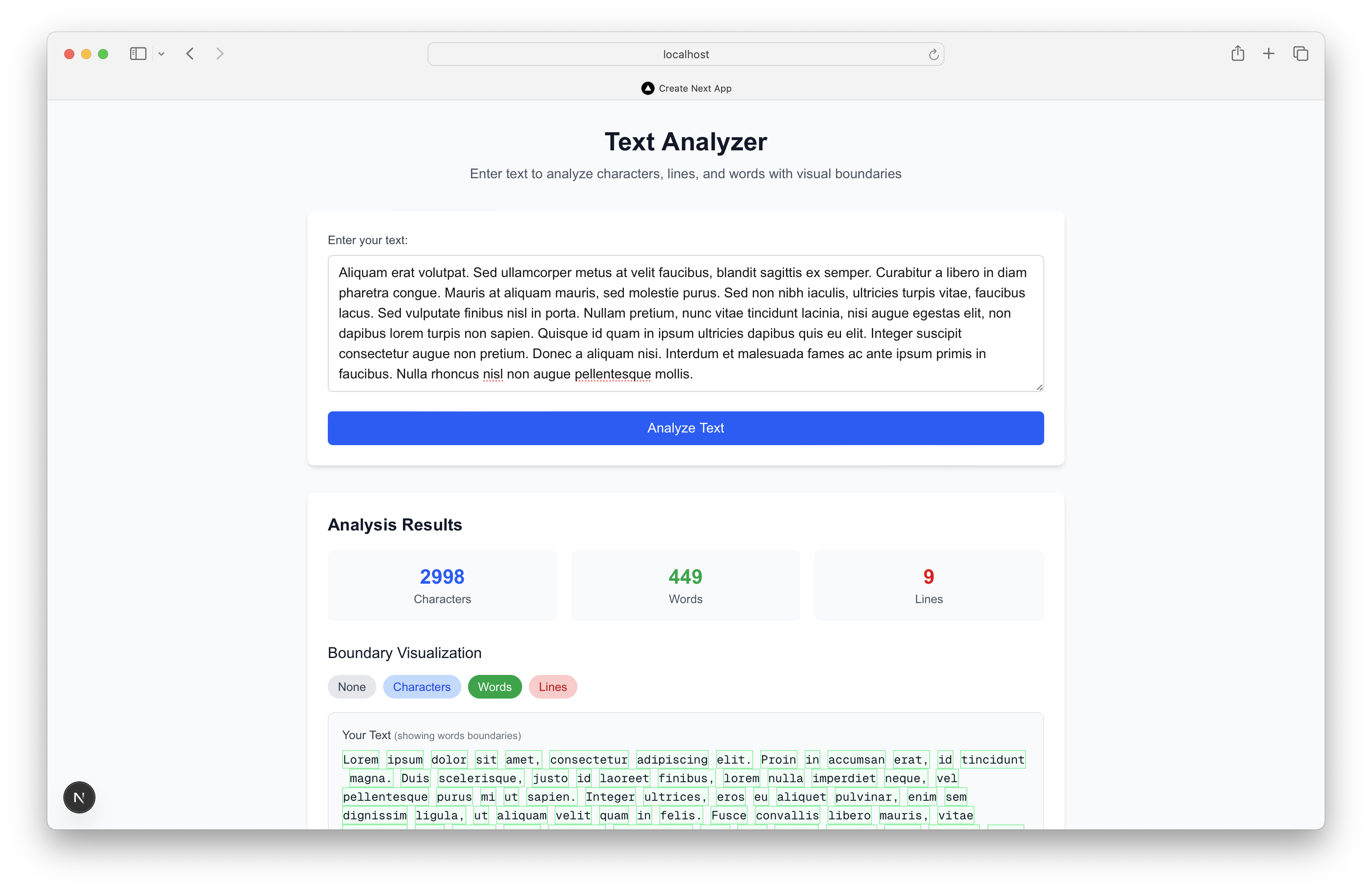

Install.md-guided Agent

- Created the project

- Installed the dependencies swiftly

- Implemented the application immediately

- Started the dev server, application runs and works perfectly

Claude Code Usage Stats:

Total cost: $0.3367

Total duration (API): 2m 27.9s

Total duration (wall): 4m 33.5s

Total code changes: 321 lines added, 105 lines removed

Usage by model:

claude-3-5-haiku: 4.4k input, 185 output, 0 cache read, 0 cache write

claude-sonnet: 33 input, 6.9k output, 407.8k cache read, 28.3k cache write

Application Screenshot

(We were surprised to see the install.md-guided agent produce a nicer looking application!)

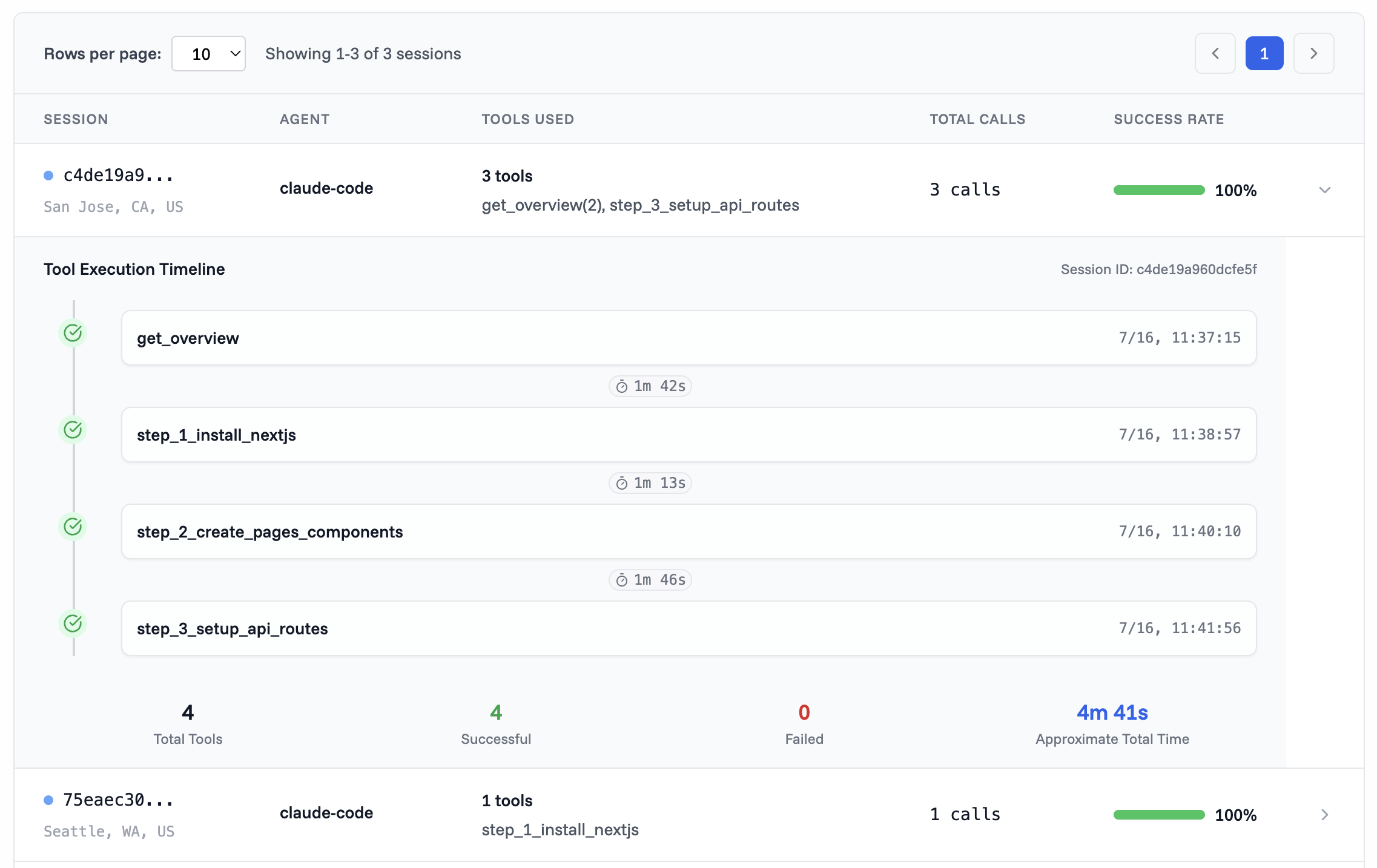

Don't forget, install.md-guided runs also benefit from Session Flow Analysis, which isn't available when agents aren't guided by install.md. Here's a snapshot from the Claude Code session we ran to build the text analyzer app above:

Why This Matters for Your Projects

The implications of context rot extend far beyond academic research. Every company building libraries, frameworks, SDKs, CLIs, and APIs faces the same challenge: how to make their tools accessible to both human developers and AI coding agents.

The Business Impact

- Faster developer onboarding through clearer implementation paths

- Higher success rates for AI-assisted development

- Reduced support burden from implementation confusion

- Better developer experience leading to increased adoption

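- Lower token and time burn: in our simple example, the install.md-guided run cost about 21% less ($0.3367 vs. $0.4289), used roughly a third of the Haiku input tokens (4.4k vs. 13.5k), and took less than half the wall-clock time to implement (4m 33.5s vs. 10m 3.9s)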
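These comparisons can be checked against the Claude Code usage reports from the two runs. The sketch below copies the reported figures into a small script and divides the self-guided value by the install.md-guided value for each metric. Token counts shown as "k" in the reports are already rounded, and allTokens simply sums every input, output, cache-read, and cache-write figure, which is our own aggregation rather than a Claude Code metric.

```typescript
// Figures copied from the two Claude Code usage reports in this post.
// Values reported as "k" were already rounded, so token totals are approximate.

interface RunStats {
  costUsd: number;
  apiSeconds: number;
  wallSeconds: number;
  haikuInput: number;
  allTokens: number; // our own sum of every token figure in the report
}

function sum(values: number[]): number {
  return values.reduce((total, value) => total + value, 0);
}

const selfGuided: RunStats = {
  costUsd: 0.4289,
  apiSeconds: 3 * 60 + 34.9,
  wallSeconds: 10 * 60 + 3.9,
  haikuInput: 13500,
  // Haiku input, output; Sonnet input, output, cache read, cache write.
  allTokens: sum([13500, 439, 59, 9500, 690000, 17600]),
};

const installMdGuided: RunStats = {
  costUsd: 0.3367,
  apiSeconds: 2 * 60 + 27.9,
  wallSeconds: 4 * 60 + 33.5,
  haikuInput: 4400,
  allTokens: sum([4400, 185, 33, 6900, 407800, 28300]),
};

const metrics: Array<keyof RunStats> = ["costUsd", "apiSeconds", "wallSeconds", "haikuInput", "allTokens"];

// How many times larger the self-guided figure is than the guided one.
function ratio(metric: keyof RunStats): number {
  return selfGuided[metric] / installMdGuided[metric];
}

for (const metric of metrics) {
  console.log(`${metric}: ${ratio(metric).toFixed(2)}x`);
}
```

With these figures it prints ratios of about 1.27x for cost, 1.45x for API time, 2.21x for wall-clock time, 3.07x for Haiku input tokens, and 1.63x for all tokens combined.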

The Technical Advantage

Install.md guides offer measurable benefits:

- Token efficiency: Substantially reduced context window usage (407.8k vs. 690.0k Sonnet cache-read tokens in our test)

- Accuracy improvement: Higher completion rates and fewer errors

- Distractor resistance: Less confusion from irrelevant information

- Semantic clarity: Explicit instructions vs. ambiguous documentation

The Path Forward

Chroma's research supports what we've observed in practice: traditional documentation is poorly suited to how AI coding agents process information. The solution isn't bigger context windows; it's smarter context engineering.

Install.md guides represent this evolution in action. By structuring information specifically for AI consumption while maintaining human readability, they solve the core problems that cause context rot.

The context rot problem is real, measurable, and affecting AI coding agents today. Install.md guides offer a research-informed solution that benefits both developers and the companies whose tools they're implementing. As AI-assisted development becomes the norm, structured guidance isn't just helpful; it's essential.